(This gets very esoteric very quickly)

Those of you paying attention may have realised that very recently (January this year), browsers started showing security warnings when connecting to sites whose SSL certificates were signed using the SHA-1 hashing algorithm. This was prompted by a theoretical weakness in the algorithm that has been known about since as far back as 2005.

What has changed since then is that Google researchers have now demonstrated the attack in practice. Whilst it is not yet practicable for most attackers (with the possible exception of nation-state attackers), it is well past time that SHA-1 was gracefully retired, especially when you consider that an attack that is not sensibly practicable today may well be usable in 5-10 years.

SHA-1 is a cryptographic hashing algorithm: it takes any lump of data and produces a short, fixed-length (160-bit) hash value, ideally one that no other lump of data shares. Strictly speaking, collisions must exist, because there are far more possible lumps of data than there are 160-bit values; the real requirement is that it should be computationally infeasible to generate a collision, where two different lumps of data hash to the same value. If you run a SHA-1 tool against two files, it should only return the same value if the files are identical :-

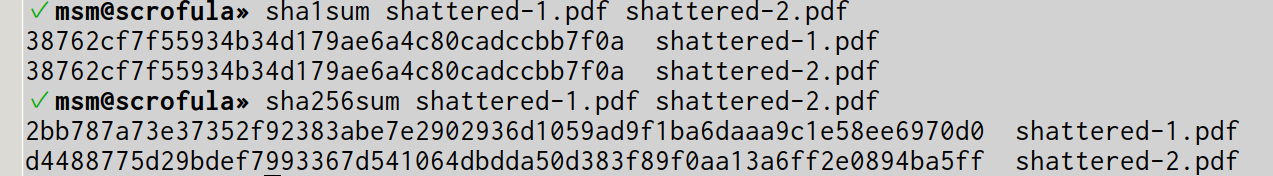

The first command shows the undesirable behaviour: two different files produce identical SHA-1 hash values. The second command shows the correct behaviour, demonstrating that the files do in fact contain different contents.
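The check in the screenshot can also be scripted. The sketch below uses Python's standard `hashlib` and `filecmp` modules; the function names `file_digests` and `compare_files` are illustrative, not part of any tool. It hashes each file once with both SHA-1 and SHA-256, reading in chunks so large files need not fit in memory, and confirms the answer with a direct byte comparison.

```python
import filecmp
import hashlib


def file_digests(path, chunk_size=65536):
    """Hash a file with SHA-1 and SHA-256 in one pass, reading it in chunks."""
    sha1 = hashlib.sha1()
    sha256 = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps reading until read() returns b"" (end of file).
        for chunk in iter(lambda: f.read(chunk_size), b""):
            sha1.update(chunk)
            sha256.update(chunk)
    return sha1.hexdigest(), sha256.hexdigest()


def compare_files(path_a, path_b):
    """Report whether two files agree under SHA-1, SHA-256 and a byte-by-byte comparison."""
    sha1_a, sha256_a = file_digests(path_a)
    sha1_b, sha256_b = file_digests(path_b)
    return {
        "sha1_match": sha1_a == sha1_b,
        "sha256_match": sha256_a == sha256_b,
        "bytes_match": filecmp.cmp(path_a, path_b, shallow=False),
    }


# Whatever the input size, a SHA-1 digest is always 160 bits (40 hex characters),
# which is why collisions must exist even though they should be infeasible to find.
small = hashlib.sha1(b"").hexdigest()
large = hashlib.sha1(b"x" * 1_000_000).hexdigest()
print(len(small), len(large))  # 40 40
```

Called as `compare_files("file-a.pdf", "file-b.pdf")` (placeholder names), a SHA-1 collision pair would be expected to report `sha1_match` as True while `sha256_match` and `bytes_match` are False; two ordinary, different files report False for all three.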

In practice, an attacker would have to produce a lump of data that generates the same SHA-1 hash value as the existing lump of data that she wanted to ‘impersonate’ (a so-called second-preimage attack), which has not been demonstrated. Google’s researchers have ‘only’ generated two lumps of data of their own choosing that share the same SHA-1 hash value (a collision), which is considerably easier.
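To get a feel for why a collision is so much easier than matching a specific target, the sketch below runs both searches against SHA-1 truncated to a handful of bits. The truncation is an assumption purely so the searches finish in moments (real SHA-1 output is 160 bits), and the helper names and message strings are hypothetical. A collision search can stop as soon as any two inputs match, so by the birthday bound it needs roughly 2^(n/2) attempts for an n-bit hash, whereas matching a fixed target needs roughly 2^n. Google's result relied on cryptanalytic shortcuts against SHA-1 itself rather than brute force, so this only shows the generic gap between the two problems.

```python
import hashlib
from itertools import count


def truncated_sha1(data: bytes, bits: int) -> int:
    """Return the top `bits` bits of the SHA-1 digest as an integer (toy weakening)."""
    digest = int.from_bytes(hashlib.sha1(data).digest(), "big")
    return digest >> (160 - bits)


def find_collision(bits: int):
    """Birthday search: stop at the first pair of distinct inputs whose hashes match."""
    seen = {}
    for i in count():
        msg = f"message-{i}".encode()
        h = truncated_sha1(msg, bits)
        if h in seen:
            return seen[h], msg, i + 1  # both inputs, plus attempts used
        seen[h] = msg


def find_second_preimage(target: bytes, bits: int):
    """Search for a different input that hashes to the same value as `target`."""
    goal = truncated_sha1(target, bits)
    for i in count():
        msg = f"forgery-{i}".encode()
        if msg != target and truncated_sha1(msg, bits) == goal:
            return msg, i + 1  # the forged input, plus attempts used


first, second, collision_tries = find_collision(16)
forged, preimage_tries = find_second_preimage(b"genuine certificate", 16)
print(f"collision after {collision_tries} attempts")
print(f"second preimage after {preimage_tries} attempts")
```

For a 16-bit truncation the birthday bound predicts a collision after a few hundred attempts (around 2^8), against around 2^16 (tens of thousands) for the second preimage; each extra bit of hash length doubles the second-preimage work but only multiplies the collision work by about 1.4.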

Cryptographic hash functions are building blocks for larger cryptographic systems such as digital signatures (including the signatures on SSL certificates), so using a weak hashing algorithm fundamentally results in less secure cryptography.
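To make the building-block point concrete: a certificate signature is computed over a hash of the certificate rather than over the certificate itself, so any other input with the same hash inherits a valid signature. The toy below illustrates this with two loud simplifications: the "signature" is an HMAC under a secret key, standing in for a real private-key signature, and the hash is SHA-1 truncated to 16 bits so a collision can be found instantly. All names (`weak_hash`, `sign`, `verify`, `find_colliding_pair`) are hypothetical.

```python
import hashlib
import hmac
import secrets
from itertools import count

SIGNING_KEY = secrets.token_bytes(32)  # toy stand-in for a CA's private key
BITS = 16  # deliberately weak: SHA-1 truncated to 16 bits (2 bytes)


def weak_hash(data: bytes) -> bytes:
    """Toy hash: the first BITS bits of the SHA-1 digest."""
    return hashlib.sha1(data).digest()[: BITS // 8]


def sign(data: bytes) -> bytes:
    """Hash-then-sign: only the digest is signed, never the data itself."""
    return hmac.new(SIGNING_KEY, weak_hash(data), hashlib.sha256).digest()


def verify(data: bytes, signature: bytes) -> bool:
    """Recompute the signature over the digest and compare in constant time."""
    return hmac.compare_digest(sign(data), signature)


def find_colliding_pair():
    """Birthday search for two distinct documents with the same weak hash."""
    seen = {}
    for i in count():
        doc = f"certificate for site-{i}".encode()
        h = weak_hash(doc)
        if h in seen:
            return seen[h], doc
        seen[h] = doc


innocent, malicious = find_colliding_pair()
sig = sign(innocent)           # the signer only ever sees the innocent document
print(verify(malicious, sig))  # True: the signature transfers to the other document
```

The signer never touched `malicious`, yet verification succeeds, because the only thing it committed to was the shared hash value. With a strong, full-length hash the collision search above would be infeasible.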